Control ingress to AKS with Azure API Management

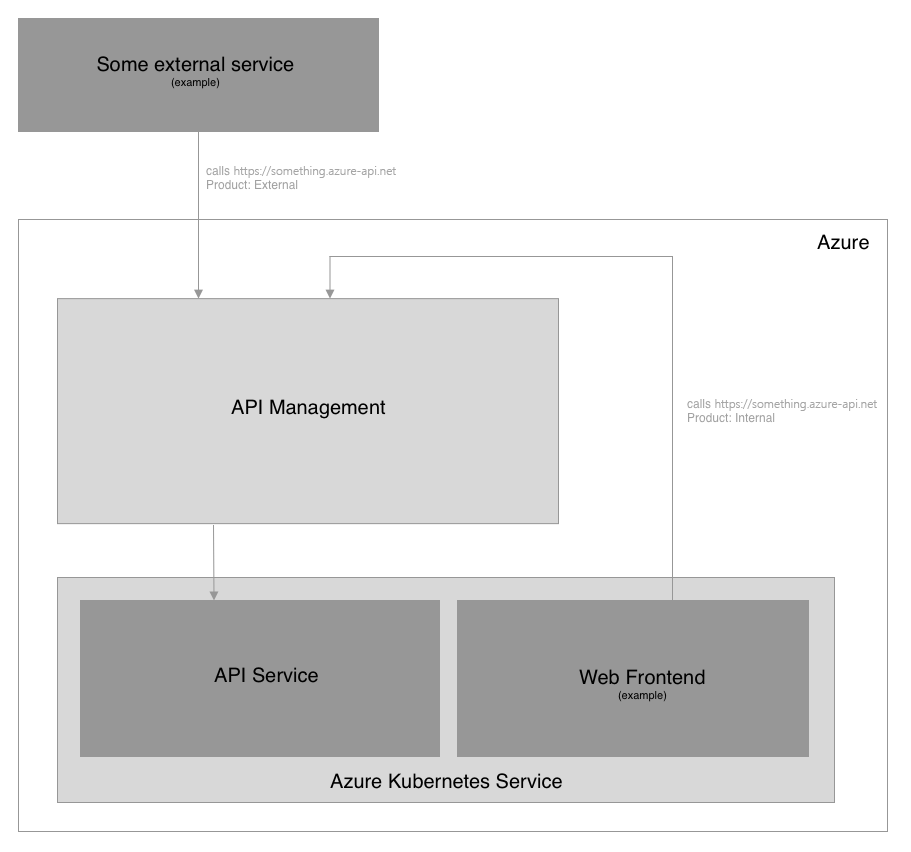

In this blog post, we will discuss how to use Azure API Management as an ingress point for AKS services that are not exposed publicly, and how other services in the Kubernetes cluster can use the same API Management instance to communicate with these APIs while leveraging the power of API Management’s policies even for internal requests.

Why would you want that?

Well, if you have a bunch of transformation and throttling rules already configured in your public-facing API Management, you might want to re-use the same rules for internal communication. Setting up a second API Management instance just for internal use and keeping all configurations in sync is obviously not the best option.

If you want to follow along but don’t have an application at hand to test this on, feel free to use my tiny GitHub sample, which I also used for this post.

Connect API Management to your AKS Network

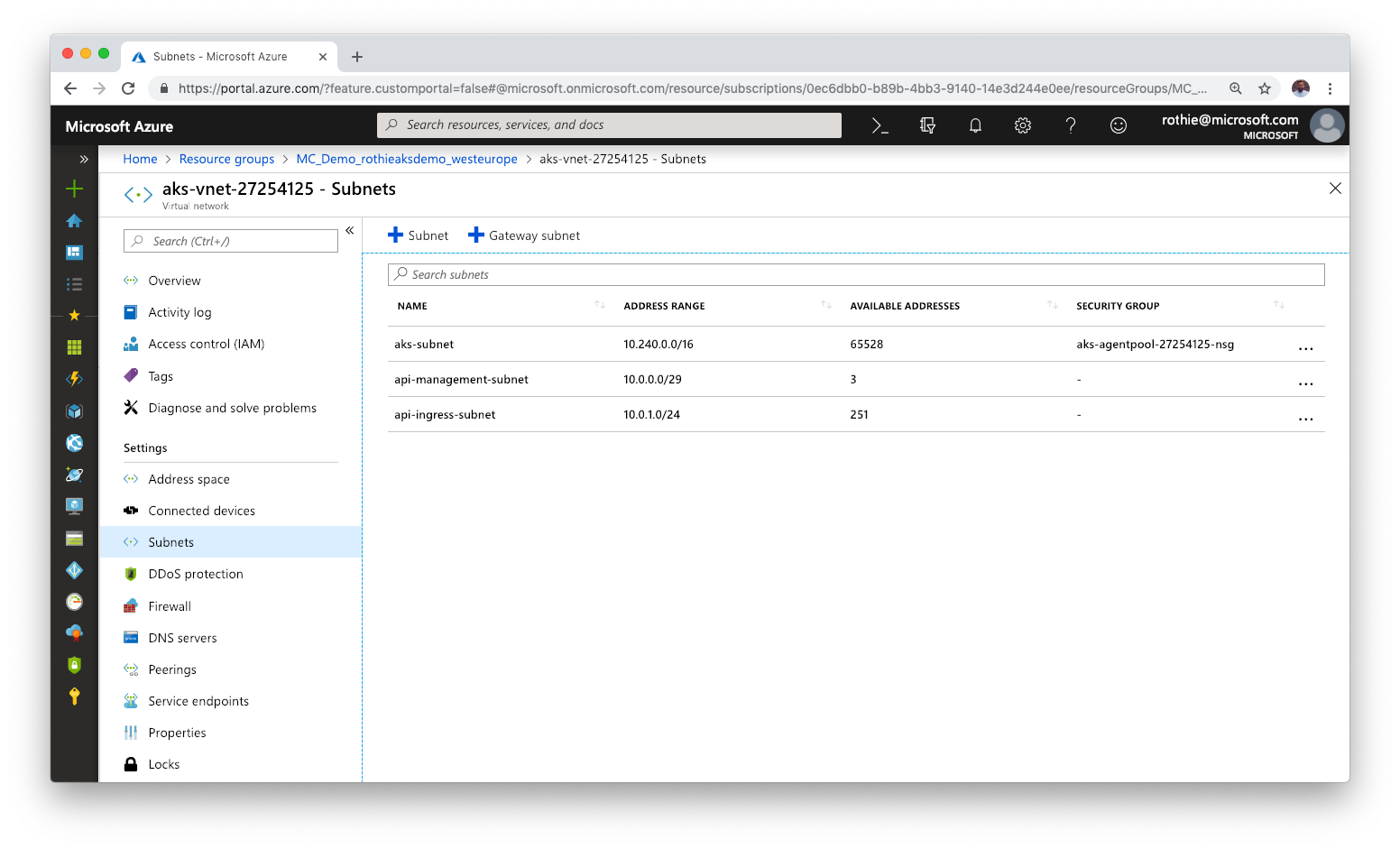

Assuming you have just created the AKS cluster and an API Management instance in Azure, we need to set up the networking first. Navigate to the Resource Group that has been created for your AKS resources and open the Virtual Network that hosts your nodes. Make sure to add two additional subnets: one for the API Management instance and one for the API service in Kubernetes.

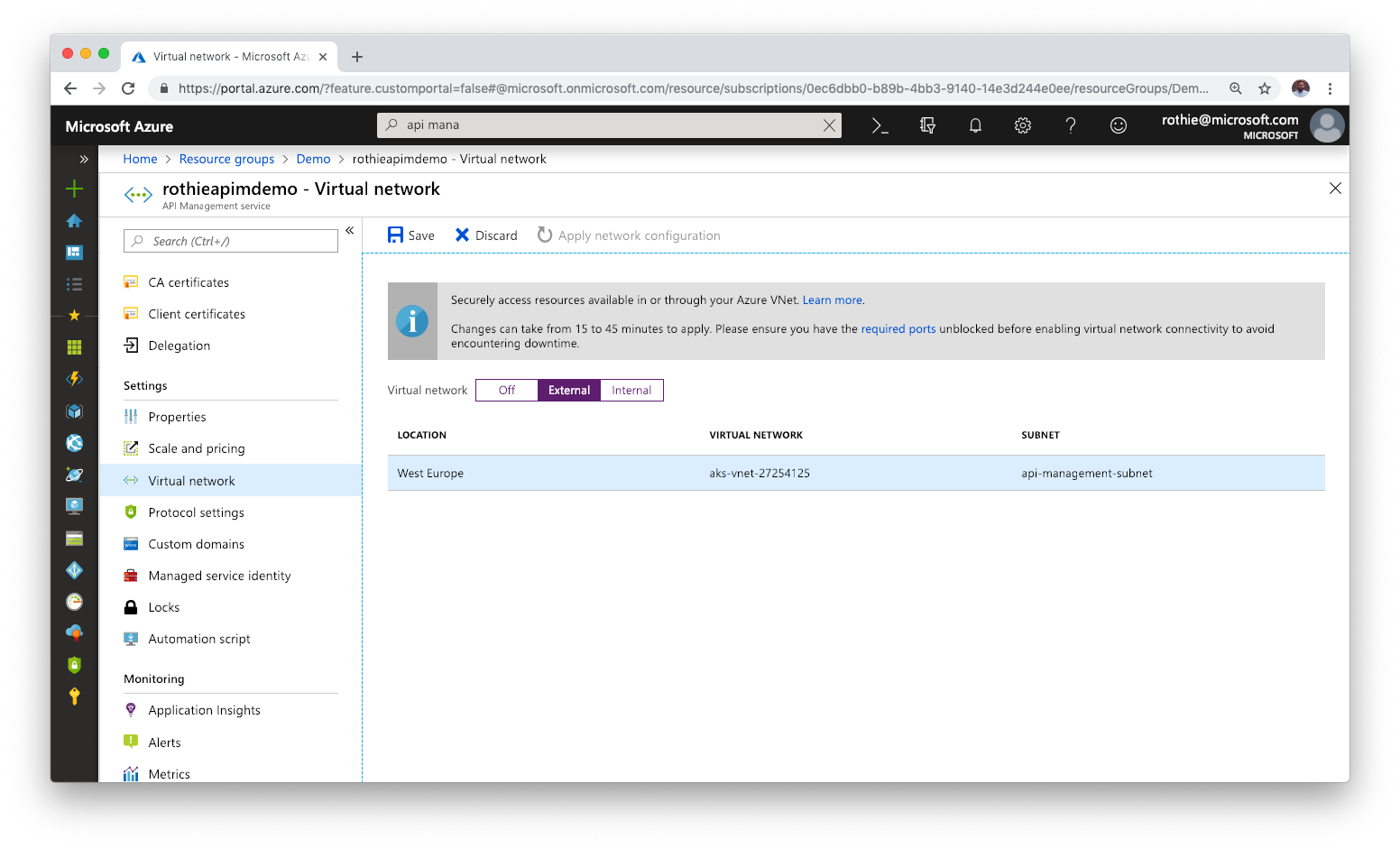

Now we need to ensure that your API Management instance can talk to that VNET. So open your API Management in Azure, navigate to the Virtual Network settings and add a new external network connection to the network in which we just created the subnet for API Management.

Note that not all API Management pricing tiers have VNET support!

Next, we want to make sure that the Kubernetes Service exposes the API service to the other subnet we created. To ensure that, we can use the service.beta.kubernetes.io/azure-load-balancer-internal annotations as described in the documentation. The service description in your manifest should be of type LoadBalancer and now look like this:

    kind: Service
    apiVersion: v1
    metadata:
      name: api
      annotations:
        service.beta.kubernetes.io/azure-load-balancer-internal: "true"
        service.beta.kubernetes.io/azure-load-balancer-internal-subnet: "api-ingress-subnet"
    spec:
      type: LoadBalancer
      selector:
        app: api
      ports:
        - protocol: TCP
          port: 8080
          targetPort: 8080

Hint: In case you are facing latency spikes with this solution, the cause is probably not API Management but more likely the additional hops from node to node inside Kubernetes, which occur when not all nodes host your service. To solve this, you can use NodePort instead of LoadBalancer and add additional traffic policies inside the Kubernetes Service.
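As a rough sketch of what the hint above could look like, the Service below switches to NodePort and sets externalTrafficPolicy to Local, so a node only forwards requests to pods running on that same node. This is an illustration under assumptions, not the configuration used in the sample: the nodePort value 30080 is a hypothetical pick from the default NodePort range, and how API Management is pointed at the nodes is left open here.

```yaml
kind: Service
apiVersion: v1
metadata:
  name: api
spec:
  type: NodePort
  # Only route to pods on the node that received the request,
  # which avoids the extra node-to-node hop.
  externalTrafficPolicy: Local
  selector:
    app: api
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 8080
      nodePort: 30080 # hypothetical value, adjust to your cluster
```

Keep in mind that with this policy, nodes that do not run a pod of the service will not answer requests on that port, so it fits best when every targeted node hosts the service.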

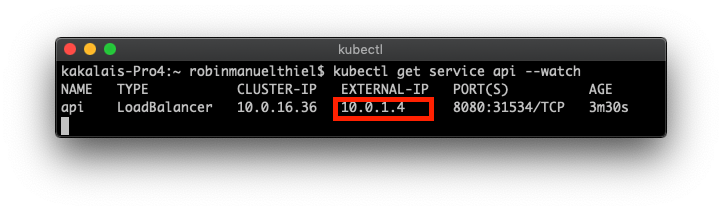

After the deployment, we should see the VNET IP address of the API service using the kubectl get service api command. This is the IP address we can use to configure API Management.

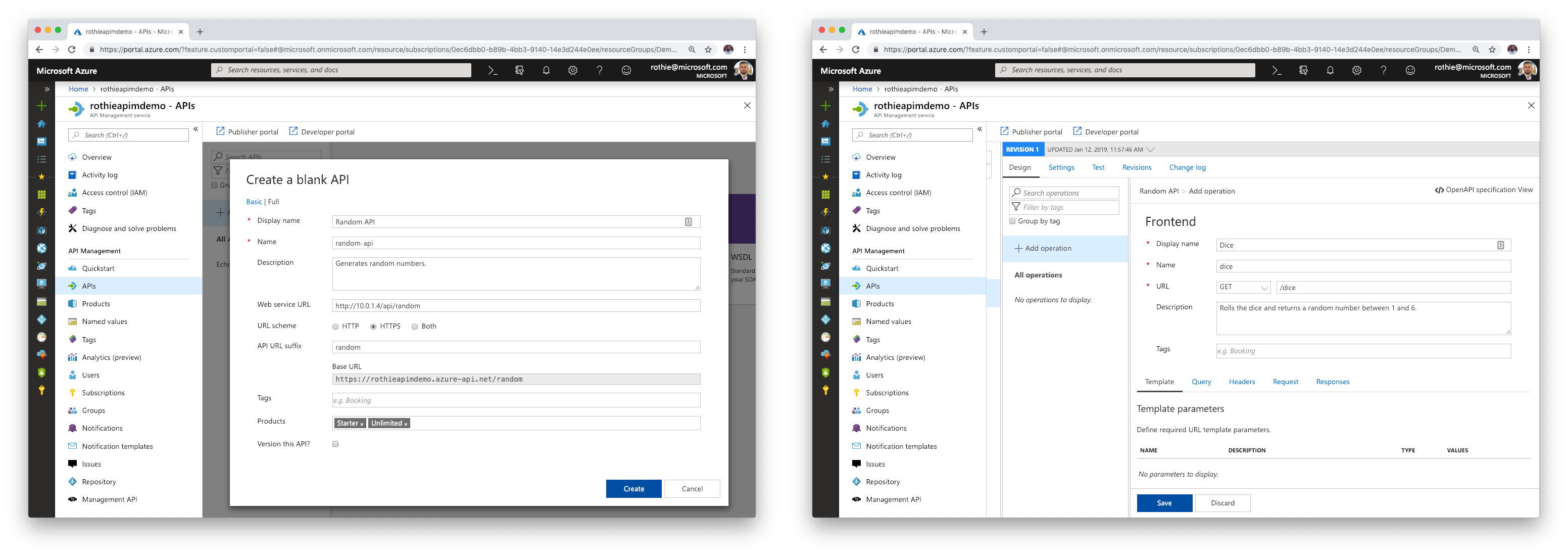

Configure API Management

Create a new API in your API Management instance and use the service’s IP address, together with the service port (8080 in this example), as the Web service URL in the configuration blade.

Call the API from other AKS services through API Management

If you want to use the same API Management logic for internal requests to your APIs too, you can do that. I would recommend creating separate Products for internal and external requests, for example if you want to avoid throttling internal calls.

As Azure handles network traffic with “cold potato routing”, you will not leave the Azure data center’s network when calling one Azure service from another in the same region. That means if you call https://your-api-management.azure-api.net from one of your services in your AKS cluster, the request will be handled by Azure internally without leaving the data center.

A full Kubernetes manifest file with a Web Frontend that gets published externally and an API that only exposes itself to the AKS VNET but is reachable through API Management could look like this:

    #######################################################
    # API                                                 #
    #######################################################
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: api
    spec:
      replicas: 3
      # apps/v1 requires an explicit selector that matches the pod template labels
      selector:
        matchLabels:
          app: api
      strategy:
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 1
      minReadySeconds: 5
      template:
        metadata:
          labels:
            app: api
        spec:
          containers:
            - name: microcommunication-api
              image: robinmanuelthiel/microcommunication-api:latest
              ports:
                - containerPort: 8080
              resources:
                requests:
                  memory: "64Mi"
                  cpu: "250m"
                limits:
                  memory: "128Mi"
                  cpu: "500m"
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: api
      annotations:
        # Expose the service on an internal load balancer in the dedicated subnet
        service.beta.kubernetes.io/azure-load-balancer-internal: "true"
        service.beta.kubernetes.io/azure-load-balancer-internal-subnet: "api-ingress-subnet"
    spec:
      type: LoadBalancer
      selector:
        app: api
      ports:
        - protocol: TCP
          port: 8080
          targetPort: 8080
    ---
    #######################################################
    # Web Frontend                                        #
    #######################################################
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: web
    spec:
      replicas: 1
      # apps/v1 requires an explicit selector that matches the pod template labels
      selector:
        matchLabels:
          app: web
      strategy:
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 1
      minReadySeconds: 5
      template:
        metadata:
          labels:
            app: web
        spec:
          containers:
            - name: microcommunication-web
              image: robinmanuelthiel/microcommunication-web:latest
              env:
                # The frontend calls the API through API Management
                - name: RandomApiHost
                  value: "YOUR_API_MANAGEMENT_GATEWAY_URL/random"
                - name: RandomApiKey
                  value: "YOUR_API_MANAGEMENT_SUBSCRIPTION_KEY"
              ports:
                - containerPort: 80
              resources:
                requests:
                  memory: "64Mi"
                  cpu: "250m"
                limits:
                  memory: "128Mi"
                  cpu: "500m"
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: web
    spec:
      type: LoadBalancer
      selector:
        app: web
      ports:
        - name: http
          port: 80

This has been taken from a super tiny sample application that I have published on GitHub.
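The manifest passes the API Management subscription key to the web frontend as a plain environment value. If you would rather not keep the key in the Deployment itself, one possible variation is to store it in a Kubernetes Secret and reference it from the container. This is only a sketch and not part of the sample: the Secret name apim-subscription and the key name subscription-key are made up for illustration.

```yaml
kind: Secret
apiVersion: v1
metadata:
  name: apim-subscription # hypothetical name
type: Opaque
stringData:
  subscription-key: "YOUR_API_MANAGEMENT_SUBSCRIPTION_KEY"
```

The env section of the web container would then read the value from that Secret instead of a literal string:

```yaml
# Fragment of the web Deployment's container spec, not a complete manifest
env:
  - name: RandomApiHost
    value: "YOUR_API_MANAGEMENT_GATEWAY_URL/random"
  - name: RandomApiKey
    valueFrom:
      secretKeyRef:
        name: apim-subscription # hypothetical Secret from above
        key: subscription-key
```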